Docker and PaaS: a perfect match!

In the IT world, Docker has established itself as a de facto standard and plays an important role in modern development environments. It is free software developed by Docker, Inc. and is based on Linux containers, in which individual services run isolated from one another. Docker is thus a platform for packaging, distributing and running applications. This is made easier by the fact that an image can be moved between hosts largely independently of the operating system distribution installed there.

Why talk about Platform-as-a-Service (PaaS) in this context? A PaaS offering simplifies running applications in production, such as critical database systems, and can increase security, because the cloud provider takes care of smooth operation. There are many good reasons to combine Docker and PaaS, which I would like to show you below.

Docker Platform

Many companies are constantly looking for ways to save time, costs and, above all, resources. This is exactly where Docker comes in: it offers a convenient solution for many demanding steps in the development process. Docker is open source software and uses so-called container technology. It separates software components from each other by running each one independently in its own container. For example, a database and a web server would run in different containers.

The isolation is achieved with mechanisms of the Linux kernel, namely namespaces and control groups (cgroups). Namespaces give each container its own view of system resources such as files, processes, network interfaces and the hostname. Control groups limit the amount of resources, such as CPU, memory and network bandwidth, that the processes in a group may consume.
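On a Linux host, both kernel mechanisms can be inspected through the /proc file system. The following Python sketch is only an illustration and not part of Docker: it lists the namespaces of a process and splits a line of /proc/<pid>/cgroup into its fields. The function names are made up for this example, and on systems without these /proc entries the namespace lookup simply returns an empty result.

```python
import os
from pathlib import Path


def list_namespaces(pid="self"):
    """Map each namespace type (e.g. 'net', 'pid') to its identifier.

    Reads the symlinks in /proc/<pid>/ns and returns {} if that directory
    is not available, for example on non-Linux systems.
    """
    ns_dir = Path("/proc") / str(pid) / "ns"
    namespaces = {}
    try:
        for entry in sorted(ns_dir.iterdir()):
            try:
                namespaces[entry.name] = os.readlink(entry)
            except OSError:
                # Some entries may not be readable without extra privileges.
                continue
    except OSError:
        return {}
    return namespaces


def parse_cgroup_line(line):
    """Split one line of /proc/<pid>/cgroup into (hierarchy_id, controllers, path)."""
    hierarchy_id, controller_field, path = line.strip().split(":", 2)
    controllers = controller_field.split(",") if controller_field else []
    return hierarchy_id, controllers, path


if __name__ == "__main__":
    for ns_type, identifier in list_namespaces().items():
        print(ns_type, identifier)
```

Two processes in the same container show the same namespace identifiers, while a process in another container shows different ones.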

Isolation makes it easier to move and replicate services, since settings no longer need to be configured manually. Once a Docker image containing the runtime environment has been stored, the environment can be shared with other people without having to be set up again. Further steps can simply build on it.

Difference from virtualization

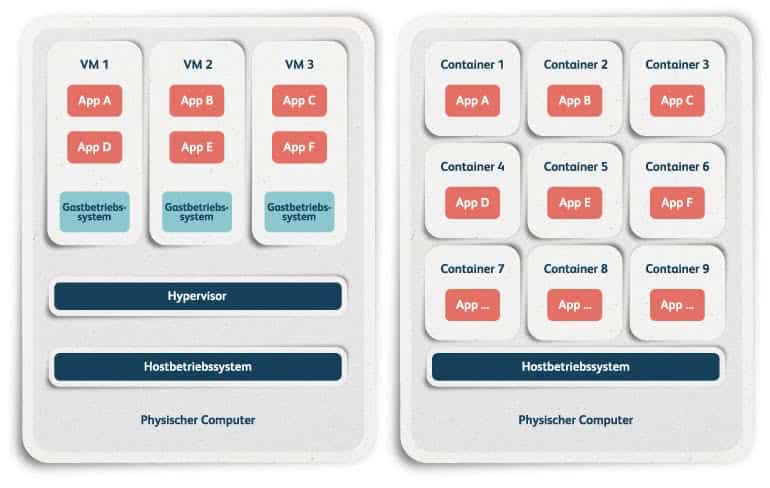

Virtual machines are independent compute units that are simulated on a hardware system. Each virtual machine has its own operating system, tailored to its requirements. This guest operating system does not run directly on the server hardware of the host system; instead, a so-called hypervisor abstracts the hardware as an additional layer for the virtual systems. Furthermore, setting up and adapting the environment of each virtual machine takes time.

Disadvantages of virtualization

- Lower performance than on physical hardware.

- High resource usage, for example RAM and hard disk space, as each system has its own instance of the operating system.

This can make operating virtual machines expensive, time-consuming and complicated. This is where Docker offers a more lightweight technology.

You can read more about virtualization in our glossary entry "What is virtualization?".

Docker Workflow

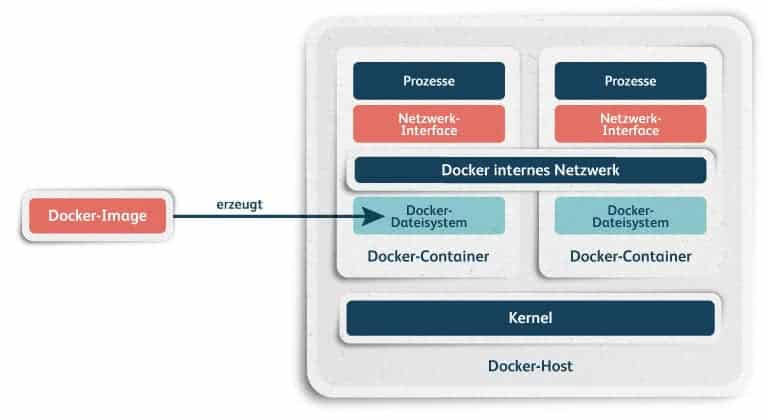

As already indicated, Docker offers a good alternative to virtualization by using container technology. Docker containers have their own file system but share the operating system kernel of the Docker host. This means that processes running in containers appear in the process table of the host operating system. The containers are managed by the so-called Docker daemon.

When a Docker container starts, its file system is initially provided by the Docker image, which is read-only. On top of the image, Docker adds a further, writable layer into which the container writes its own data. Docker images consist of programs, libraries and data. Any number of containers can be created from one Docker image.
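The relationship between an image and the containers created from it can be pictured with a small model. The Python sketch below is a deliberate simplification: it represents a file system as a dictionary and uses `collections.ChainMap` to put a per-container writable layer in front of shared image content. Real Docker works on files through storage drivers, not on dictionaries, and the paths and contents here are invented for the example.

```python
from collections import ChainMap

# Image content shared by all containers: path -> file content (example data).
IMAGE_LAYER = {"/bin/app": "binary v1", "/etc/app.conf": "port=80"}


def start_container(image_layer):
    """Return a container's file system view: a fresh writable layer over the image.

    ChainMap looks a key up in the first mapping and then in the second.
    Writes always go to the first mapping, so the image layer is never modified.
    """
    writable_layer = {}
    return ChainMap(writable_layer, image_layer)


container_a = start_container(IMAGE_LAYER)
container_b = start_container(IMAGE_LAYER)

# Container A overwrites one file and creates another, both in its own layer.
container_a["/etc/app.conf"] = "port=8080"
container_a["/tmp/cache"] = "data"

print(container_a["/etc/app.conf"])  # value from container A's own layer
print(container_b["/etc/app.conf"])  # value still taken from the image
```

Because each container only adds its own layer, starting a further container from the same image does not require copying the image content.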

A Docker image is built from a so-called Dockerfile. The Dockerfile is a text file with build instructions (not a shell script) that typically starts from a base image, obtained for example from Docker Hub, and installs the required software on top of it. It thus describes how a Docker image can be created. A particular advantage of the Dockerfile is how simple it is to write: a few instructions (FROM, RUN, CMD, COPY, EXPOSE) are enough. Afterwards, the Docker image can be pushed to a Docker registry and stored there.
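To make the five instructions more concrete, the following Python sketch renders a minimal Dockerfile as text. It is an illustration under assumptions, not a recommended build setup: the base image `python:3.12-slim`, the paths, the port and the start command are all example values, and `render_dockerfile` is a helper invented for this article.

```python
import json


def render_dockerfile(base_image, source_dir, target_dir, build_command, port, start_command):
    """Render a minimal Dockerfile using FROM, COPY, RUN, EXPOSE and CMD."""
    lines = [
        # Base image that the new image builds on.
        f"FROM {base_image}",
        # Copy the application files from the build context into the image.
        f"COPY {source_dir} {target_dir}",
        # Executed once while the image is built, e.g. to install software.
        f"RUN {build_command}",
        # Documents the port the application listens on inside the container.
        f"EXPOSE {port}",
        # Default process started in a container (exec form, a JSON array).
        f"CMD {json.dumps(start_command)}",
    ]
    return "\n".join(lines) + "\n"


# All values below are assumptions chosen for the example.
dockerfile = render_dockerfile(
    base_image="python:3.12-slim",
    source_dir=".",
    target_dir="/app",
    build_command="pip install --no-cache-dir -r /app/requirements.txt",
    port=8000,
    start_command=["python", "/app/main.py"],
)
print(dockerfile)
```

Saved as a file named Dockerfile, the printed text could be turned into an image with `docker build`.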

In contrast to virtualization, there is no emulation layer or hypervisor. Furthermore, each container has its own network interface with its own IP address. By default, this address is only reachable from within the internal Docker network.

As a result, the same port can be used inside every Docker container without conflicts, and each container can use any number of ports. The network interface is located in a subnet that contains all containers. In its default configuration, this subnet does not allow access to the containers from outside. The usual solution is to bind a port of the Docker container to a port of the Docker host.
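Port binding is requested when a container is started. The sketch below only assembles the `docker run` command line in Python and does not execute it; the helper name, the container names and the `nginx` image are assumptions made for the example. It shows two containers that both listen on port 80 internally while being bound to different ports on the host.

```python
def docker_run_command(image, port_bindings, name=None):
    """Build the argument list for `docker run` with published ports.

    port_bindings maps a host port to a container port; each pair becomes
    `--publish HOST_PORT:CONTAINER_PORT`.
    """
    command = ["docker", "run", "--detach"]
    if name:
        command += ["--name", name]
    for host_port, container_port in port_bindings.items():
        command += ["--publish", f"{host_port}:{container_port}"]
    command.append(image)
    return command


# Both containers use port 80 internally; the host ports must differ.
web_a = docker_run_command("nginx", {8080: 80}, name="web-a")
web_b = docker_run_command("nginx", {8081: 80}, name="web-b")

print(" ".join(web_a))
print(" ".join(web_b))
```

On a host with Docker installed, each list could be passed to `subprocess.run` to actually start the container.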

Advantages of Docker

All in all, the Docker workflow can simplify processes in many companies, especially those following DevOps approaches. Docker also simplifies communication between developer and administrator teams, or between different development teams on the same project, by using the registry as a handover point. You can focus on software development without having to worry about deploying the environment.

For example, thanks to Docker technology, you no longer have to worry about which Linux distribution is in use, which library versions are installed, or how the software is compiled and delivered.

Platform-as-a-Service

With a PaaS offering, customers can create an application and run it in the runtime environment provided by the cloud provider, which can be accessed via an API. More specifically, the cloud provider takes care of the smooth running, management and availability of the service offered.

What are the advantages of combining Docker and PaaS?

Docker technology is similar to PaaS services in terms of service isolation. In both cases, the application is in the foreground and the operating system recedes into the background. Docker containers can be used to conveniently distribute the applications of a PaaS service. In summary, the developer creates an image of the application and uploads it to the registry so that it is available to others. The image can be transferred to any number of computers running Docker. Docker then creates isolated containers based on the image.
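The path from developer to target machine can be summarized as a sequence of Docker commands: build and push on one side, pull and run on the other. The Python sketch below only constructs these command lines; `registry.example.com`, the image name and both helper functions are placeholders invented for this illustration.

```python
def publish_commands(image_name, registry, tag="latest", build_context="."):
    """Return the image reference and the commands a developer runs to publish it."""
    reference = f"{registry}/{image_name}:{tag}"
    commands = [
        # Build the image from the Dockerfile in the build context.
        ["docker", "build", "--tag", reference, build_context],
        # Upload the image to the registry so that others can use it.
        ["docker", "push", reference],
    ]
    return reference, commands


def deploy_commands(reference):
    """Return the commands run on any Docker host that should start the application."""
    return [
        # Download the image from the registry.
        ["docker", "pull", reference],
        # Start an isolated container based on the image.
        ["docker", "run", "--detach", reference],
    ]


# Hypothetical registry and image name.
reference, publish = publish_commands("shop-backend", "registry.example.com", tag="1.0")
for command in publish + deploy_commands(reference):
    print(" ".join(command))
```

The registry is the only point of contact between the two sides, which is what allows the image to be started on any number of hosts.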

As already mentioned, the fact that Docker containers are not reachable from outside by default is a small challenge. A further difficulty is that Docker was originally designed for IPv4. For Docker to communicate via IPv6, IPv6 has to be enabled and configured manually in the Docker network settings.
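One common way to do this is through the Docker daemon's configuration file. The Python sketch below generates such a configuration, assuming the daemon reads /etc/docker/daemon.json (the usual location on Linux) and that the default bridge network should receive an IPv6 subnet. The helper name is invented, and the prefix is taken from the range reserved for documentation, so it must be replaced with a real one.

```python
import ipaddress
import json


def ipv6_daemon_config(subnet):
    """Return daemon.json content that enables IPv6 on Docker's default bridge."""
    network = ipaddress.ip_network(subnet)
    if network.version != 6:
        raise ValueError(f"{subnet} is not an IPv6 subnet")
    config = {
        "ipv6": True,                  # enable IPv6 on the default bridge network
        "fixed-cidr-v6": str(network)  # subnet from which containers get addresses
    }
    return json.dumps(config, indent=2)


# 2001:db8::/32 is reserved for documentation; use your own prefix instead.
print(ipv6_daemon_config("2001:db8:1::/64"))
```

After the file has been changed, the Docker daemon has to be restarted for the setting to take effect.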